Introduction

Few technologies have seen the kind of growth and change that AI has experienced in recent months. The advent of Generative AI (GenAI) has brought a major shift to this landscape. Let’s try to understand what makes GenAI different from its predecessors.

Imagine you are back in school. Every subject—whether it is math, biology, history, or literature—has its own dedicated teacher, an expert in their specific area. When you need help with geometry, you go to your math teacher; for chemistry, you head to the lab with your science teacher. This is how we have always understood learning—specialized knowledge in specialized hands.

But now, let us imagine something extraordinary. Picture a scenario where one teacher could answer all your questions, regardless of the subject. Whether you are solving complex equations or analyzing Shakespeare, this teacher has all the answers, ready and available at any time. No longer do you need to run from classroom to classroom. Instead, you have a single go-to mentor who understands every topic deeply and can explain it clearly, whenever you need.

This, in essence, is what advances in GenAI have brought to the table. Think of it as a teacher who can field questions on almost any subject, break down complex problems, and respond in real time across many domains. It is a shift in how we access expertise: fewer silos, and far fewer hand-offs between specialists.

The World Before GenAI

Let’s take one field: Natural Language Processing (NLP), the branch of Artificial Intelligence that enables computers to understand and process human language much as people do. NLP encompasses several subfields, such as:

- Sentiment Analysis: This involves determining the sentiment expressed in a text, for example whether it is positive, negative, or neutral, or which emotion it conveys.

- Named Entity Recognition (NER): This subfield focuses on identifying and classifying named entities in text into predefined categories such as people, organizations, locations, and more.

There are many other subfields as well, such as machine translation, text summarization, and question answering.

In conventional AI, each of these tasks required its own specialized model. To perform sentiment analysis, for instance, we would train a model on labeled data collected for that one task. If we also needed named entity recognition, we would train a separate model specialized for that purpose.
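To make the “one model per task” workflow concrete, here is a toy sketch in Python. The training sentences and the helper names `train_sentiment_model` and `predict_sentiment` are hypothetical, and the word-scoring scheme is a deliberately simple stand-in for the statistical or neural classifiers real systems used; the point is only that everything it learns is tied to a single task.

```python
from collections import Counter

def train_sentiment_model(examples):
    """Learn a score per word from labeled (text, label) pairs.

    Words seen more often in positive examples get a positive score;
    words seen more often in negative examples get a negative score.
    """
    positive, negative = Counter(), Counter()
    for text, label in examples:
        words = text.lower().split()
        if label == "positive":
            positive.update(words)
        else:
            negative.update(words)
    vocabulary = set(positive) | set(negative)
    return {word: positive[word] - negative[word] for word in vocabulary}

def predict_sentiment(model, text):
    """Sum the learned word scores; words never seen in training count as 0."""
    score = sum(model.get(word, 0) for word in text.lower().split())
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

# Hypothetical labeled data: this model can only ever do sentiment analysis.
training_data = [
    ("I love this phone", "positive"),
    ("great battery and great screen", "positive"),
    ("I hate the slow charger", "negative"),
    ("terrible screen and bad battery", "negative"),
]

sentiment_model = train_sentiment_model(training_data)
print(predict_sentiment(sentiment_model, "great phone"))  # → positive
```

A named entity recognizer would need its own labeled data and its own separately trained model: nothing this sentiment model learned carries over to that task.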

The GenAI Revolution: What’s Different Now?

With the advent of large language models (LLMs), everything changed. We now have a single model capable of performing many tasks: sentiment analysis, named entity recognition, summarization, and much more. Think of it as a magic box: simply ask for what you want, and it delivers, without the task-specific data collection, training, and testing that were once required. The launch of ChatGPT brought this transformation to the wider world.
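With an LLM, the task is specified in the input rather than baked in through training. The sketch below only builds the prompts that would be sent to one and the same model; the template wording and the `build_prompt` helper are hypothetical, and the call that actually sends a prompt to a model (which depends on the provider’s API) is deliberately left out.

```python
# Hypothetical prompt templates: the same model handles every task,
# and only the instruction inside the prompt changes.
TASK_TEMPLATES = {
    "sentiment": (
        "Classify the sentiment of the following text as positive, "
        "negative, or neutral.\n\nText: {text}\nSentiment:"
    ),
    "ner": (
        "List every person, organization, and location mentioned in "
        "the following text.\n\nText: {text}\nEntities:"
    ),
    "summary": "Summarize the following text in one sentence.\n\nText: {text}\nSummary:",
}

def build_prompt(task: str, text: str) -> str:
    """Fill in the template for the requested task."""
    if task not in TASK_TEMPLATES:
        raise ValueError(f"unknown task: {task!r}")
    return TASK_TEMPLATES[task].format(text=text)

review = "Apple opened a new store in Paris, and customers loved it."
for task in TASK_TEMPLATES:
    print(build_prompt(task, review))
    print("---")
```

Supporting a new task here means writing a new template, not collecting a new dataset and training a new model.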

Isn’t it fascinating, and at the same time baffling, that such a powerful tool exists, capable of doing almost everything without specialized training? Now, let’s dive a bit deeper to understand how this works.

World of Generative AI & Foundation Models

It was 30 November 2022. OpenAI launched ChatGPT to the public, and in the following weeks the whole world went berserk. What is ChatGPT, and why did it create such a furore?

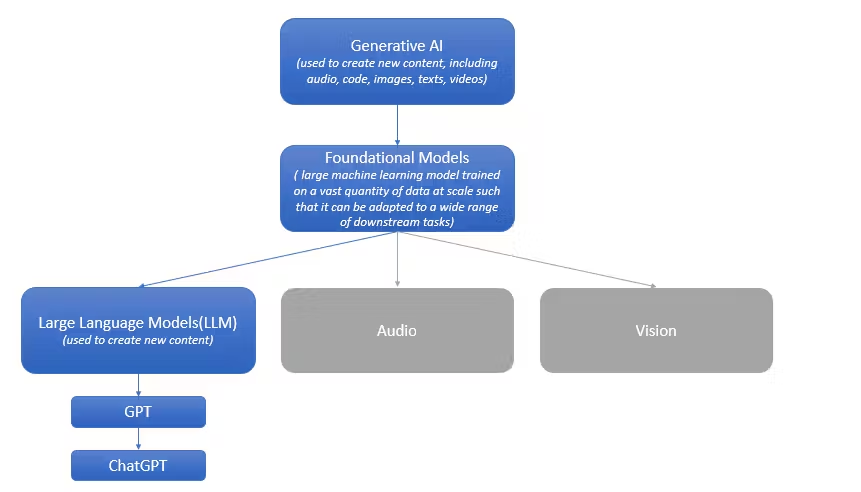

To begin with, ChatGPT is powered by an LLM. To understand what that means, we have to take one step back. LLMs are part of a model family called Foundation Models.

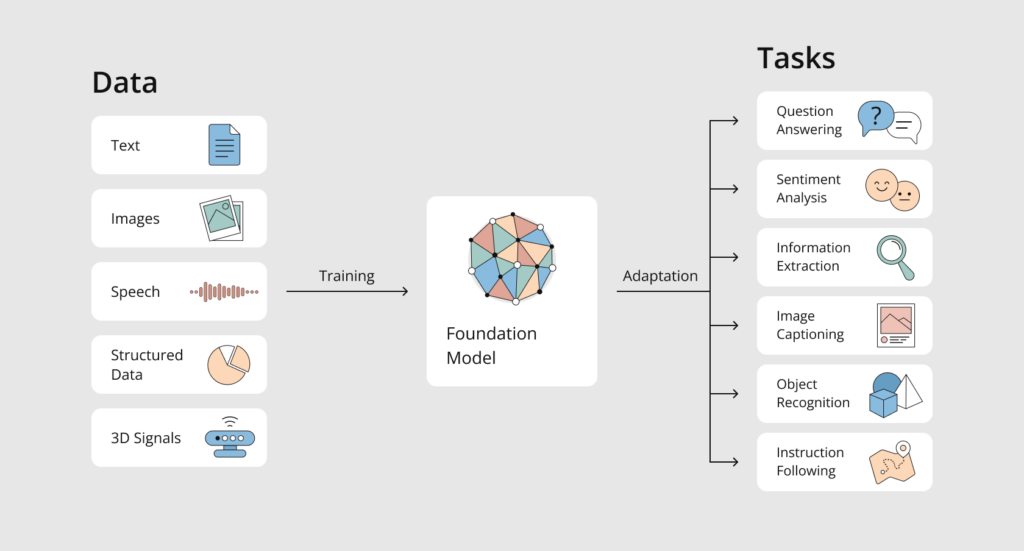

As discussed earlier, conventional AI models were built by training each model on task-specific data for a single task (such as sentiment analysis or entity recognition). We then moved to a new paradigm: a single Foundation Model that can handle many of these tasks and can be adapted to new ones.

How is it able to do that? Because the model is trained on a huge amount of unstructured data in an unsupervised (more precisely, self-supervised) manner; we are talking about terabytes of data. Foundation Models belong to the field of AI called Generative AI, so named because these models generate something new. At their core, foundation models are trained on a generative task such as predicting the next word in a sentence. We can then take such a model and, with a small amount of labeled data, tune it to perform a wide range of NLP tasks. We can also simply prompt it to do a classification or a summarization.
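The pre-training objective is easy to state: given the words so far, predict the next one. As a toy illustration, the sketch below “learns” next-word predictions by counting which word follows which in a tiny corpus (a bigram model). This is a stand-in, not how foundation models work internally: real models use transformer neural networks over subword tokens and terabytes of text. What carries over is the setup, in which the raw text supplies its own labels.

```python
from collections import Counter, defaultdict

def train_bigram_model(corpus):
    """Count, for every word, which words follow it in the corpus.

    No human labels are needed: each next word in the raw text is the
    "answer" for the word before it (self-supervision).
    """
    follows = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows

def predict_next_word(model, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    candidates = model.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]

corpus = [
    "the cat sat on the mat",
    "the cat chased the mouse",
    "the dog sat on the rug",
]
model = train_bigram_model(corpus)
print(predict_next_word(model, "the"))  # → cat
print(predict_next_word(model, "sat"))  # → on
```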

Now, let’s try to understand LLMs, GPT, and ChatGPT.

Large Language Models: The Backbone of GenAI

Before we can understand LLMs, we need to understand Transformers. The 2017 paper ‘Attention Is All You Need’ (Vaswani et al.) revolutionized natural language processing. It introduced the transformer architecture, which has since become the foundation of state-of-the-art models including BERT, GPT, and more.

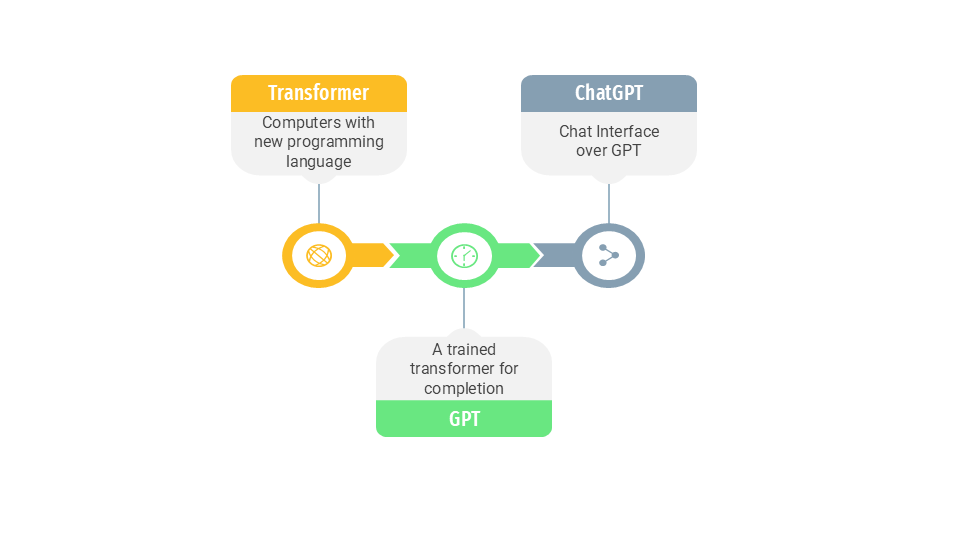
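The paper’s central operation is scaled dot-product attention, defined as softmax(Q K^T / sqrt(d_k)) V: each word’s query vector is compared with every word’s key vector, and the resulting weights decide how much of each value vector flows into that word’s output. The pure-Python sketch below implements only that formula for small lists of vectors; a real transformer learns the projections that produce Q, K, and V, runs many attention heads in parallel, and relies on optimized tensor libraries.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    largest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(queries, keys, values):
    """Compute softmax(Q K^T / sqrt(d_k)) V for lists of vectors."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Weighted average of the value vectors, one output component at a time.
        output = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(output)
    return outputs

# Self-attention over three toy 2-dimensional token vectors. Q, K and V are
# all the same here; a real model derives them with learned weight matrices.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in scaled_dot_product_attention(tokens, tokens, tokens):
    print([round(x, 3) for x in row])
```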

Transformers: Think of them as giving the computer a new programming language, and that language is English. A transformer converts sentences and words into numerical representations that a computer can work with. Transformers were originally designed for machine translation and were later applied to text-completion tasks. Traditionally, we have talked to computers through code, which must specify exactly what needs to be done (Python code, for example); if we get anything wrong, such as the syntax, the computer will not understand. Now we have a way to communicate with the computer in a natural language such as English.
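A first step in turning sentences and words into something a computer can work with is mapping text to numbers. The toy word-level tokenizer below is an assumption for illustration: production models such as GPT use subword schemes (for example, byte-pair encoding) and then map each ID to a learned embedding vector, which is what the transformer layers actually process.

```python
def build_vocabulary(texts):
    """Assign an integer ID to every distinct word, in order of first appearance.

    ID 0 is reserved for words the vocabulary has never seen.
    """
    vocab = {"<unk>": 0}
    for text in texts:
        for word in text.lower().split():
            if word not in vocab:
                vocab[word] = len(vocab)
    return vocab

def encode(text, vocab):
    """Convert a sentence into the list of integer IDs a model would consume."""
    return [vocab.get(word, vocab["<unk>"]) for word in text.lower().split()]

vocab = build_vocabulary(["the cat sat on the mat", "the dog barked"])
print(encode("the dog sat on the sofa", vocab))  # → [1, 6, 3, 4, 1, 0]
```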

Pre-trained transformers: When we train a transformer on a large body of data before adapting it to any specific task, the result is a pre-trained transformer.

Now, back to LLMs. Large language models are computer programs trained on vast amounts of text. They learn patterns from that text and can then interpret and generate human-like text. Transformers are the architecture most widely used to build large language models.

GPT (Generative Pre-trained Transformer): The Powerhouse of Generative AI

GPT is a family of language models based on the transformer architecture. The models are pre-trained on large amounts of text (articles, websites, and so on) without any specific task in mind. This helps them pick up facts about the world, some reasoning ability, and even a degree of common sense. Because GPT is a generative model, it produces human-like text one piece at a time, each time predicting what comes next.
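Generating text means running next-word prediction in a loop: predict a word, append it, and predict again from the longer sequence. The sketch below shows that loop with greedy decoding. The probability table `NEXT_WORD_PROBS` is entirely made up and stands in for a trained model; a real GPT computes a fresh distribution over its whole vocabulary from all previous tokens, not just the last word, and usually samples from it (controlled by settings such as temperature) rather than always taking the top word.

```python
# Hypothetical next-word probabilities standing in for a trained model.
NEXT_WORD_PROBS = {
    "<start>": {"generative": 0.6, "large": 0.4},
    "generative": {"models": 0.7, "ai": 0.3},
    "large": {"models": 1.0},
    "models": {"write": 0.6, "predict": 0.4},
    "write": {"text": 0.9, "<end>": 0.1},
    "predict": {"words": 1.0},
    "text": {"<end>": 1.0},
    "words": {"<end>": 1.0},
    "ai": {"<end>": 1.0},
}

def generate(max_words=10):
    """Greedy autoregressive decoding: repeatedly append the likeliest next word."""
    words = []
    previous = "<start>"
    for _ in range(max_words):
        # Unknown words end the sequence in this toy table.
        distribution = NEXT_WORD_PROBS.get(previous, {"<end>": 1.0})
        next_word = max(distribution, key=distribution.get)
        if next_word == "<end>":
            break
        words.append(next_word)
        previous = next_word
    return " ".join(words)

print(generate())  # → generative models write text
```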

ChatGPT: Transforming Conversations with AI

ChatGPT wraps a chat interface around a GPT model (one further tuned to follow instructions and hold a dialogue), giving people a platform for conversations with AI. In other words, it is a text-completion model, GPT, wrapped in a chat interface.
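One way to picture a completion model wrapped in a chat interface: the interface keeps the conversation as a list of messages and, before each reply, renders it into a single piece of text that ends where the assistant’s turn begins. The model then simply completes that text. The role names and transcript layout below are assumptions for illustration; ChatGPT’s actual internal format is not described here, and each model family defines its own chat template and special tokens.

```python
def format_chat(messages):
    """Flatten a list of {"role", "content"} messages into one completion prompt.

    Hypothetical transcript layout: one "Role: text" line per message, then an
    open "Assistant:" turn for the completion model to continue.
    """
    lines = []
    for message in messages:
        role = message["role"].capitalize()
        lines.append(f"{role}: {message['content']}")
    lines.append("Assistant:")
    return "\n".join(lines)

conversation = [
    {"role": "system", "content": "You are a helpful teacher."},
    {"role": "user", "content": "What is a transformer?"},
]
print(format_chat(conversation))
```

When the model’s reply comes back, the interface appends it as an assistant message, so the next prompt carries the whole conversation so far.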

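So, to summarize:

- Transformers: the neural network architecture introduced in ‘Attention Is All You Need’, which turns text into representations a computer can work with.
- Large language models (LLMs): programs trained on vast amounts of text, usually built on the transformer architecture, that can generate human-like text.
- GPT: a family of LLMs that are generative, pre-trained transformers.
- ChatGPT: a GPT model wrapped in a chat interface.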

Conclusion

The evolution of GenAI has revolutionized the way we interact with technology. As we continue to explore the potential of generative AI, it is crucial to consider the ethical implications and ensure responsible usage. We are on the brink of an era where human-computer interaction becomes more intuitive and intelligent than ever before.

References

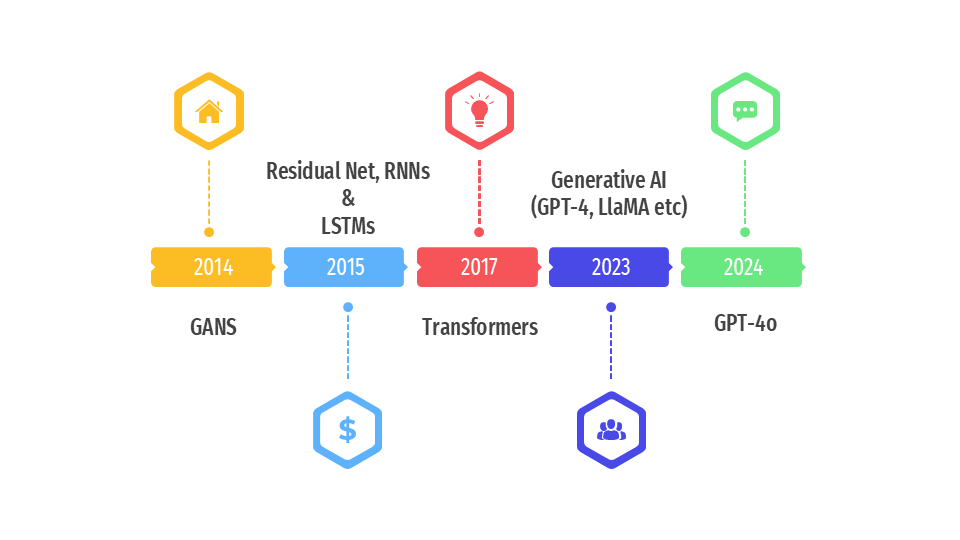

The rise of generative AI: A timeline of breakthrough innovations